Technical Description

Overview

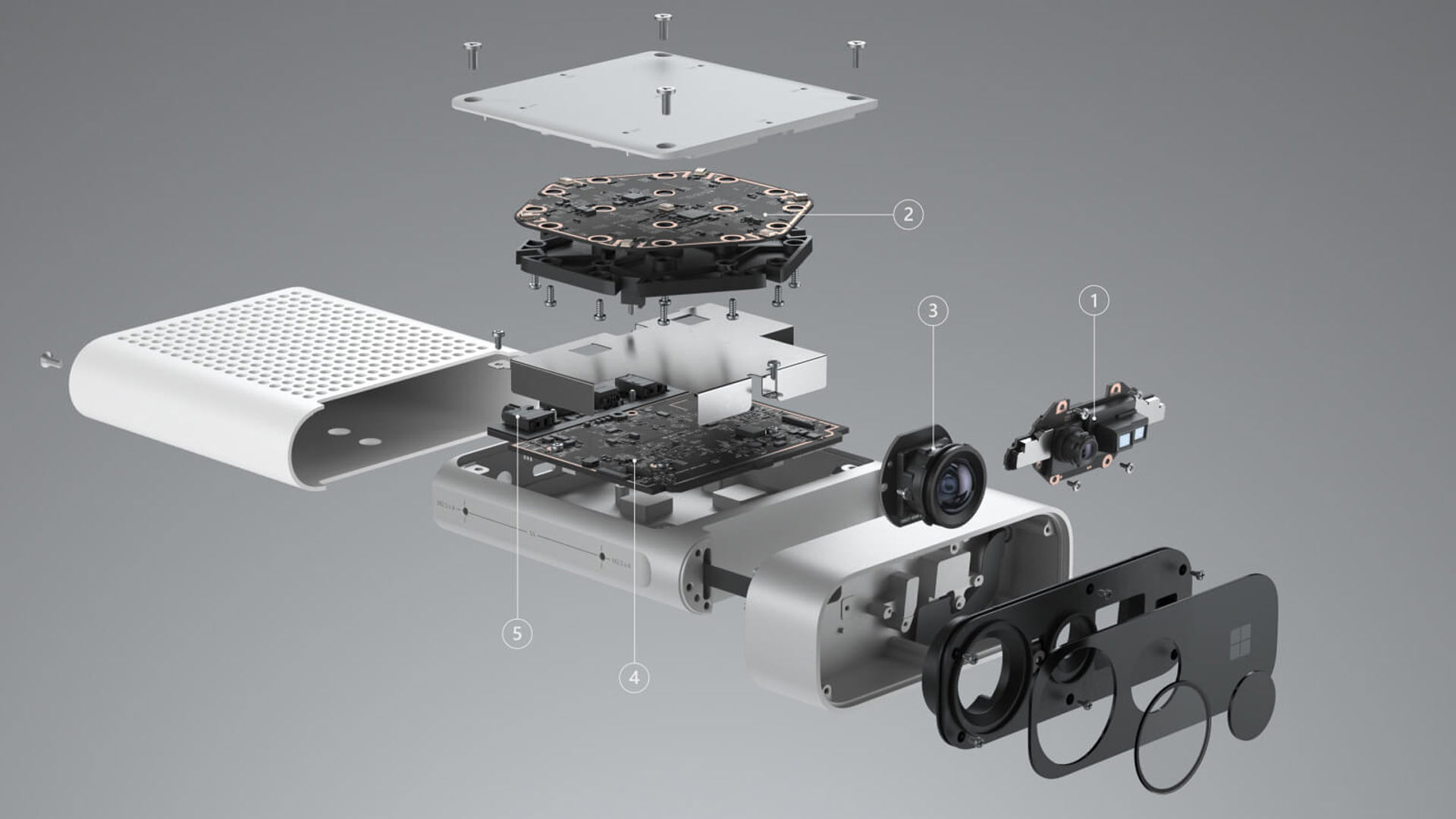

We will have an Azure device at one end of the room. It will track the person in real time using a blob tracking program in Unity. The tracking data will supply the person's x, y coordinates to Unity. Once Unity has these coordinates, it will play the sound node that is closest to the person through Bluetooth headphones. These sound nodes will be placed throughout the room and will use sound files created in FL Studio. Unity will play a specific sound when the person gets close to a node or enters a certain location in the room. Once the device and the program are talking to each other, the person will be immersed in a sound-heavy experience that they can explore.
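
The "closest sound node" step described above can be illustrated with a short, language-neutral sketch. It is written in Python rather than as a Unity script, and the node names, positions, and radii are hypothetical values chosen only for illustration, not the project's actual layout.

```python
import math

# Hypothetical node layout: (name, x, y, radius) in arbitrary room units.
SOUND_NODES = [
    ("city_ambience", 1.0, 1.0, 1.5),
    ("cave_hum", 4.0, 1.0, 1.5),
    ("church_organ", 1.0, 4.0, 1.5),
    ("forest_ambience", 4.0, 4.0, 1.5),
]


def closest_node(x, y, nodes=SOUND_NODES):
    """Return the name of the nearest node whose radius contains (x, y).

    Returns None when the tracked position is outside every node.
    """
    best_name, best_dist = None, math.inf
    for name, node_x, node_y, radius in nodes:
        dist = math.hypot(x - node_x, y - node_y)
        if dist <= radius and dist < best_dist:
            best_name, best_dist = name, dist
    return best_name
```

Calling `closest_node(1.2, 0.9)` with this layout selects `"city_ambience"`, while a position outside every radius yields `None`, which would correspond to no node being triggered.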

Sound Files

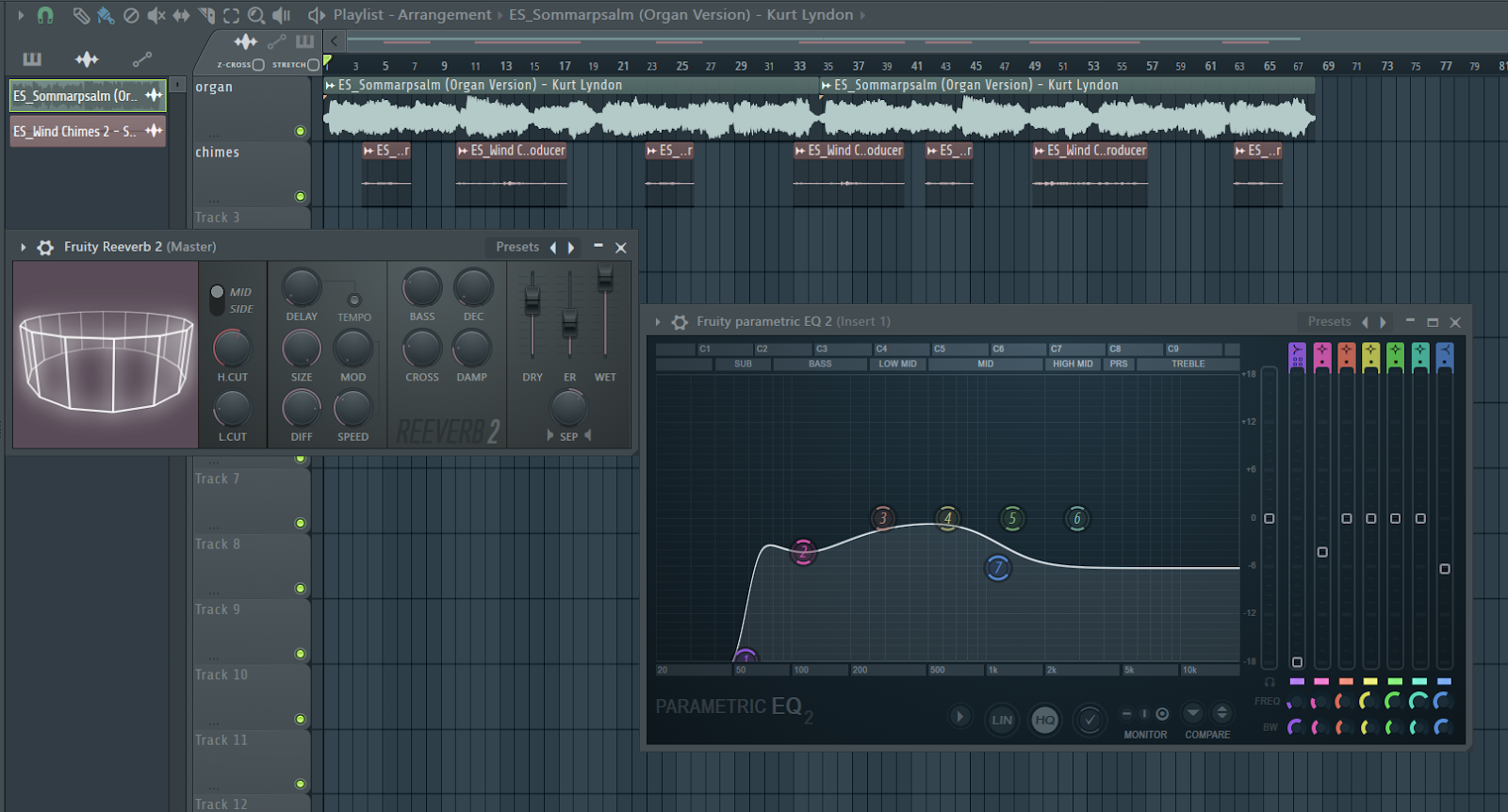

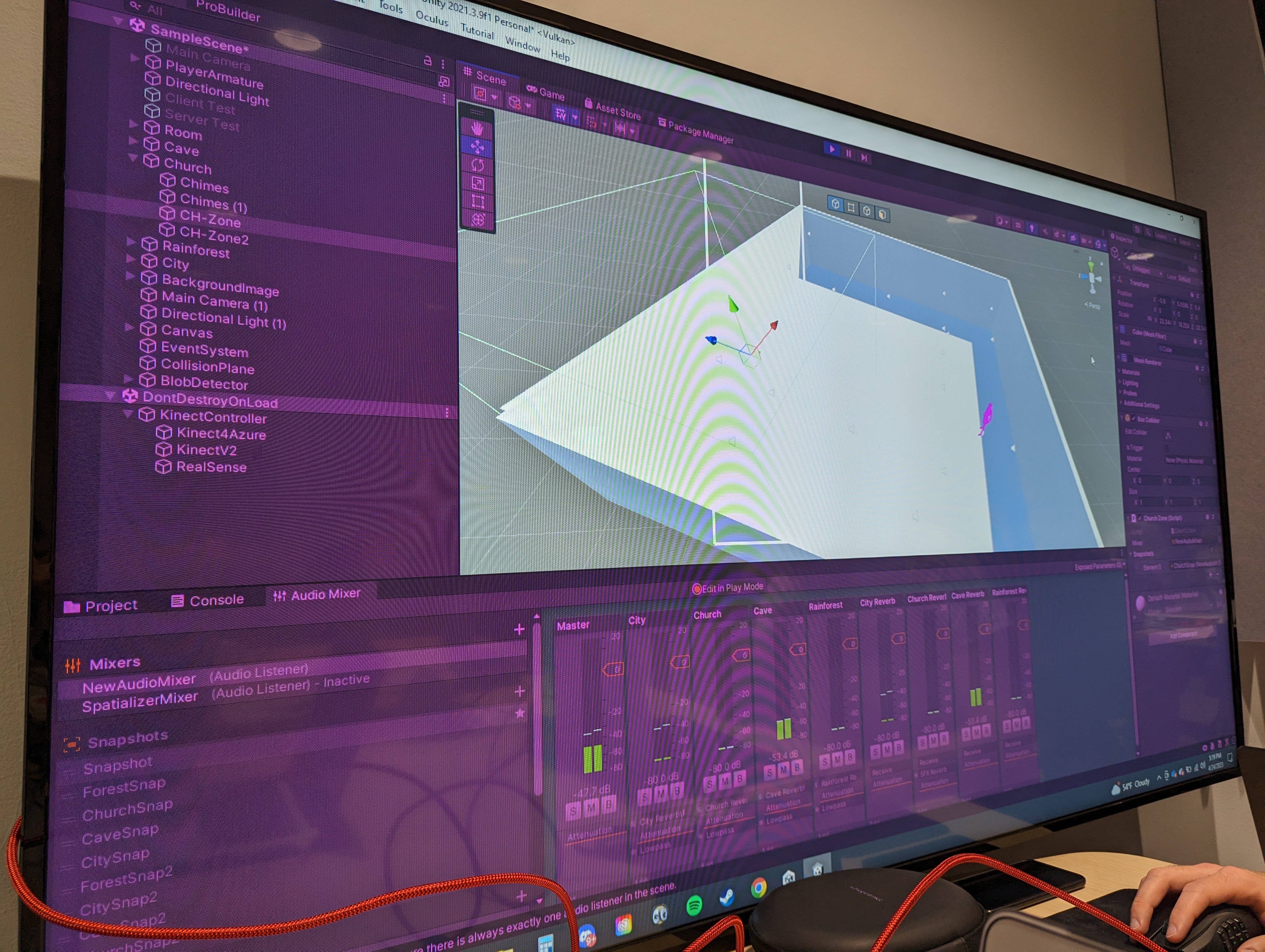

For the sound design, I gathered sound effects from Epidemic Sound, an online royalty-free sound library. We decided as a group that we wanted center sounds, such as main ambience sounds, as well as “satellite” sounds. The center sound is the base sound the participant hears in each quadrant of the sound room, and the satellite sounds are sounds the participant can find by walking around that quadrant.

I began with the city, thinking about what sounds could fit into this space. I gathered sounds for street ambience, cars passing by, an ice cream vendor, and someone walking in high heels. I mixed these sounds together so that the reverb and equalization were consistent. The “street ambience” sound effect was the center sound for the quadrant. The same was done with the cave sounds, where dripping water and a low hum were placed in the same reverberant space. The low hum was the center sound for the quadrant. The dripping of the water was edited to match the rhythm of the person walking in high heels in the city, to make the transition between the two quadrants smooth. The church did not have a lot of variance in sounds, as it is a solemn place. I chose an organ performance of a classic church piece called Sommarpsalm, performed by Kurt Lyndon, as the center sound for the quadrant. I also added wind chimes to reinforce the feeling of a dreamy, holy place. Finally, the rainforest sound design was built around a forest ambience sound clip as the center sound. I added a stream, the sound of birds, and a bit of rain.

Each of these sounds was implemented in the Unity project separately. In Unity, we created audio source objects and made each center sound's audio source node wide enough to cover the whole quadrant that the user is in. The satellite sounds were placed around the center sounds in smaller nodes. This means the user can step into a quadrant, hear the main ambient noise, and continue walking around the quadrant to find new sounds.

After each sound was placed, we used the Unity Audio Mixer to blend the quadrants together. With the mixer, we created a channel for each quadrant (City mixer channel, Church mixer channel, etc.) as well as a reverb bus for each. This let us control the reverb tails of a quadrant separately from its dry sound.

Snapshots are a tool in the Unity Audio Mixer for creating presets, which we triggered with box colliders. We used snapshots to create an audio preset for each quadrant so that the quadrants blend better. For example, when the participant walks into the cave quadrant, a snapshot called “CaveSnap” is triggered that lowers the volume of every other quadrant's channel, but not the reverb buses. It also lowers the cutoff frequency of a low-pass filter on each of the other quadrants, to give the effect of every other sound being muffled by the walls of the cave. Each quadrant also had an inner box trigger that was smaller than the outer box trigger. This inner trigger activated a second snapshot that lowers the reverb buses. The effect is that reverb carries over from one quadrant to the next and dies down as the user gets closer to the middle of the new quadrant. All of this was done to blend the quadrants into one another and make the space as a whole more cohesive.
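
The two-level trigger and snapshot idea can be sketched outside of Unity as follows. This is a minimal Python illustration, not the project's mixer setup: the parameter names, the dB and Hz values, and the snapshot names other than “CaveSnap” are assumptions, and the linear blend is only a simple stand-in for a transition between presets.

```python
# Hypothetical mixer parameters for the city channel as heard from each state.
# Volumes are in dB, the low-pass cutoff in Hz; all values are illustrative.
DEFAULT_SNAP = {"city_volume_db": 0.0, "city_lowpass_hz": 22000.0, "city_reverb_db": 0.0}
CAVE_SNAP = {"city_volume_db": -20.0, "city_lowpass_hz": 800.0, "city_reverb_db": 0.0}
CAVE_INNER_SNAP = {"city_volume_db": -20.0, "city_lowpass_hz": 800.0, "city_reverb_db": -40.0}


def pick_snapshot(in_outer_trigger, in_inner_trigger):
    """Choose a snapshot name from the state of the two box triggers."""
    if in_inner_trigger:
        return "CaveInnerSnap"  # hypothetical name: also lowers the reverb bus
    if in_outer_trigger:
        return "CaveSnap"       # named in the text: dry channels lowered only
    return "DefaultSnap"        # hypothetical name: nothing attenuated


def blend_snapshots(start, end, t):
    """Linearly interpolate every parameter from start to end, t in [0, 1]."""
    t = max(0.0, min(1.0, t))
    return {name: start[name] + (end[name] - start[name]) * t for name in start}
```

Halfway through a transition from `DEFAULT_SNAP` to `CAVE_SNAP`, the city channel in this sketch sits at -10 dB with its reverb bus untouched, which mirrors the reverb carrying over until the inner trigger is reached.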

Coding and Unity

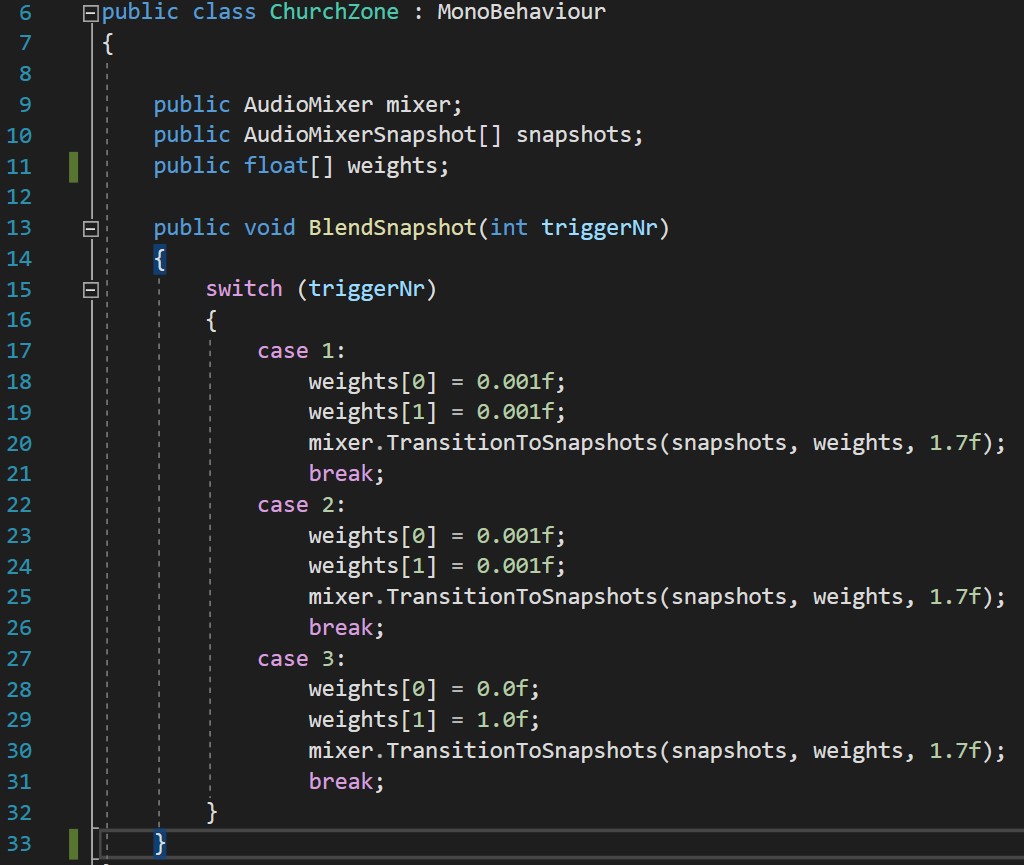

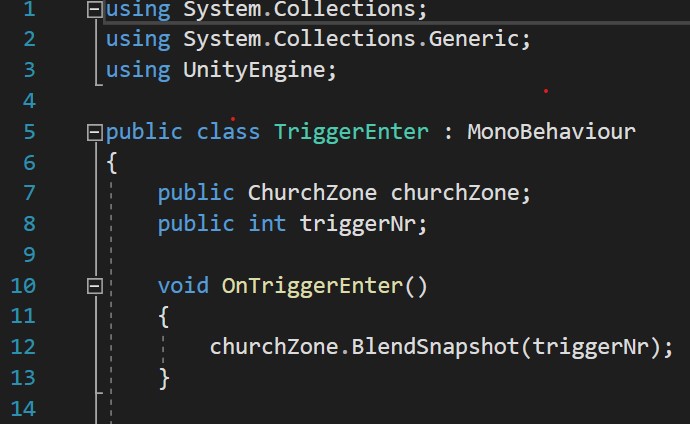

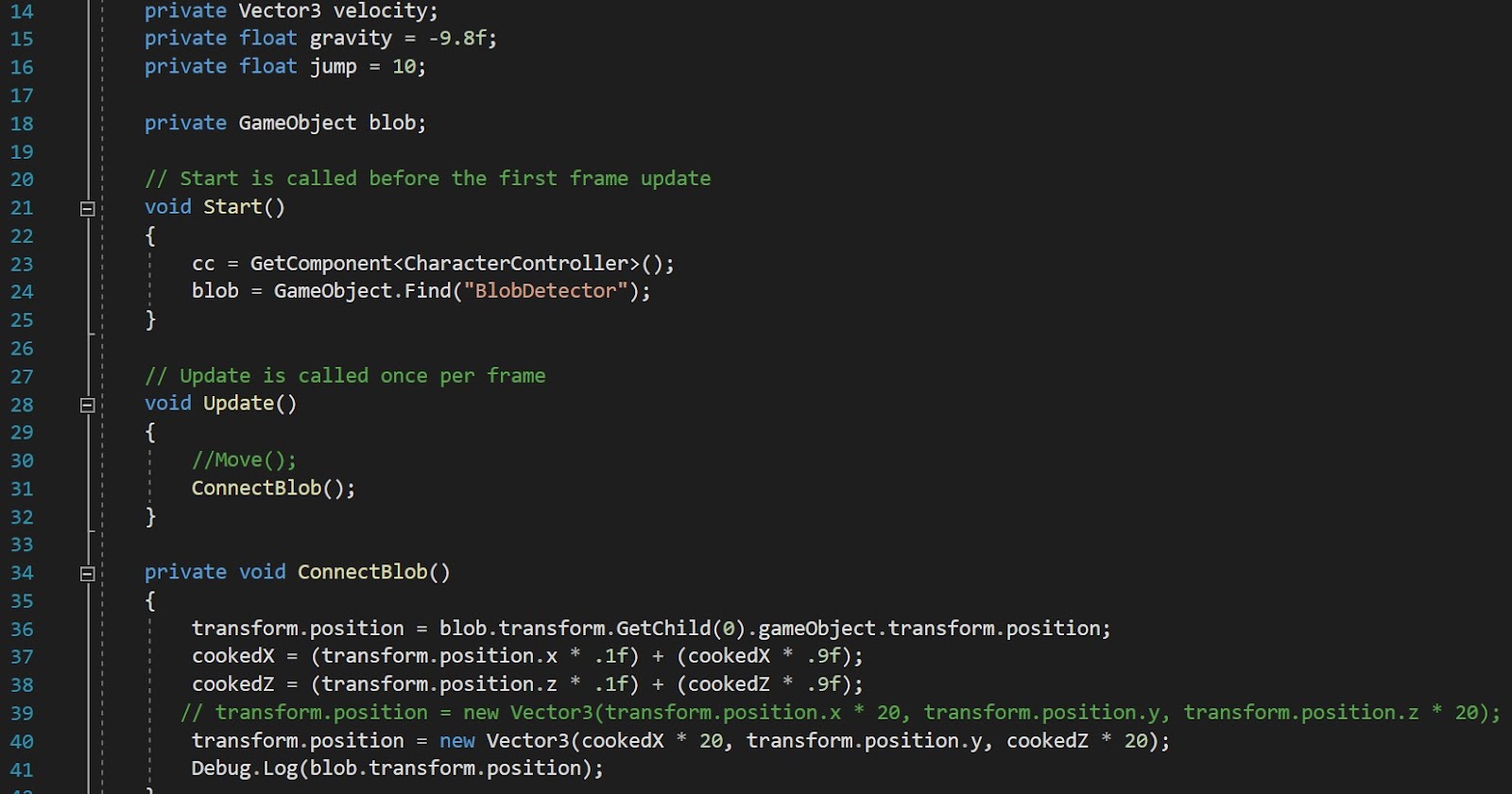

We used snapshots inside Unity to control the different zones and apply effects to the different areas. We wrote one script that fires a trigger when the player enters an area, and another script that takes that trigger and switches to a different snapshot depending on which area the player has just entered. To track the user, we took the Azure's blob tracking example and modified it for our needs. We had a basic character controller representing where the person is in the room. We read the location of the blob and applied its coordinates to the character controller, so the controller moves with the person. The movement was too small at first, so we had to multiply it to cover a larger distance. The last thing we did to the controller was average its movement to make the experience smoother.
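
The scaling and averaging steps applied to the controller can be sketched as a small moving-average filter. This is an illustrative Python version under stated assumptions, not the project's Unity script: the class name, the scale factor, and the window size are hypothetical, and a plain moving average is only one of several ways the movement could have been averaged.

```python
from collections import deque


class SmoothedTracker:
    """Scale raw blob coordinates and smooth them with a moving average."""

    def __init__(self, scale=3.0, window=5):
        self.scale = scale                   # hypothetical multiplier for blob movement
        self.samples = deque(maxlen=window)  # keeps only the most recent positions

    def update(self, blob_x, blob_y):
        """Add one blob reading and return the smoothed controller position."""
        self.samples.append((blob_x * self.scale, blob_y * self.scale))
        count = len(self.samples)
        avg_x = sum(x for x, _ in self.samples) / count
        avg_y = sum(y for _, y in self.samples) / count
        return avg_x, avg_y
```

A larger window gives steadier movement at the cost of the controller lagging further behind the person, so the window size is a trade-off between smoothness and responsiveness.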